There has been much speculation recently about a possible rift in Internet governance. Essentially, many countries resent the US government’s oversight of Internet policy. They advocate transferring those responsibilities to the International Telecommunication Union (ITU), a more multilateral venue. The big news is that the European Union, which previously sat on the fence, has come out strongly in favor of this proposal. Unsurprisingly, the US government is hostile to it. More surprisingly, I agree with its unilateralist impulse, albeit for very different reasons. I was planning to write up a technical explanation, as most of the IT press has it completely wrong (as usual), but Eric Rescorla has beaten me to the punch with an excellent summary.

Many commentators have made much hay of the fact that the ITU falls under the umbrella of the United Nations. The Bush administration is clearly wary of the UN, to say the least, but that sentiment is widespread across the American policy establishment and by no means limited to Republicans. For some reason, many Americans harbor the absurd fear that the UN is plotting against US sovereignty. Of course, the reality is that the UN cannot afford its parking tickets, let alone black helicopters. American hostility towards the UN is curious, as the organization was the brainchild of a US president, Franklin D. Roosevelt; its charter was signed in San Francisco (at Herbst Theatre, less than a mile from where I live); and it is headquartered in New York.

The UN is ineffective and corrupt, but that is because the powers on the Security Council want it that way. The UN has no army of its own and depends on its member nations, especially those on the Security Council, to carry out its missions. It is hardly fair to lay the blame for the failure in Somalia at the UN’s doorstep. As for corruption, mostly in the form of patronage, it was how the US and the USSR greased the wheels of diplomacy during the Cold War, buying the votes of tin-pot nations by granting cushy UN jobs to the nephews of their kleptocrats.

A more damning criticism of the UN is that it does not embody any kind of global democratic representation. The principle is one country, one vote. Just as residents of Wyoming have 60 times more power per capita in the US Senate than Californians, India’s billion inhabitants have as many votes in the General Assembly as those of the tiny Principality of Liechtenstein. The real action is in the Security Council anyway, but India has no permanent seat there either. Had Americans not had a soft spot for Chiang Kai-shek, China, with its own billion souls, would not have a seat at that table either. That said, the Internet population is spread unevenly across the globe, and the Security Council is probably more representative of it.

In any case, the ITU was established in 1865, long before the UN, and its institutional memory is very different. It is also based in Geneva, like most international organizations, geographically and culturally a world away from New York. In other words, even though it is formally an arm of the UN, the ITU is in practice completely autonomous. The members of the Security Council do not enjoy veto rights in the ITU, and the appointment of its secretary-general, a relatively technocratic and unpoliticized affair, is not subject to US approval, or at least acquiescence, the way the UN secretary-general’s is, or that of the heads of more sensitive organizations like the IAEA.

My primary objections to the ITU are not about its political structure, governance or democratic legitimacy, but about its competence, or more precisely its lack thereof. The ITU is basically the forum where government PTT monopolies meet incumbent telcos to devise big standards and blow big amounts of hot air. Well into the nineties, they were pushing a bloated network architecture called OSI as an alternative to the Internet’s elegant TCP/IP protocol suite. I was not surprised: I used to work at France Télécom’s R&D labs, and had plenty of opportunity to gauge the “caliber” of the incompetent parasites who would go on ITU junkets. Truth be told, those people’s chief competency is bureaucratic wrangling, and like rats leaving a sinking ship, they have since decamped to the greener pastures of the IETF, whose immune system could not prevent a dramatic drop in the quality of its output. The ITU’s institutional bias is towards complex solutions that enshrine the role of legacy telcos, managed scarcity, and self-proclaimed intelligent networks architected to prevent disruptive change by users at the edge.

When people hyperventilate about Internet governance, they tend to focus on the Domain Name System, even though the real scandal is IPv4 address allocation, such as the fact that Stanford and MIT each have more IP addresses allocated to them than all of China. Many other hot-button items, like the fight against child pornography and pedophiles, more properly belong with criminal-justice organizations like Interpol. But let us humor the pundits and focus on the DNS.
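For a sense of scale, the legacy “class A” allocations handed out in the early days of the Internet are /8 networks. The sketch below uses Python’s standard `ipaddress` module to compute how large one such block is; 18.0.0.0/8, the block long held by MIT, is used purely as an illustration, and no per-country figures are assumed.

```python
import ipaddress

# A legacy class A allocation is a /8: the first 8 bits are fixed,
# leaving 24 bits (2**24 addresses) for the holder to use.
block = ipaddress.ip_network("18.0.0.0/8")  # illustrative example block

# The whole IPv4 address space (2**32 addresses) for comparison.
ipv4_total = ipaddress.ip_network("0.0.0.0/0").num_addresses

print(f"{block} holds {block.num_addresses:,} addresses")
print(f"that is 1/{ipv4_total // block.num_addresses} of all IPv4 space")
```

A single such block is therefore one 256th of every IPv4 address that can exist, which is why a handful of early institutional allocations look so lopsided next to latecomers.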

First of all, country-code top-level domains like .fr, .cn or the new kid on the block, .eu, are for all practical purposes already under decentralized control. Any government afraid that the US might tamper with its own country domain (for some reason Brazil is often mentioned in this context) can easily prevent disruption of domestic traffic by requiring its ISPs to point their DNS servers, for that zone, at authoritative servers under its control. Thus, the real area of contention is the international generic top-level domains (gTLDs), chief among them .com, the only one that really matters.
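The policy described above amounts to a zone-based override in the ISPs’ resolvers. Here is a minimal sketch of that decision logic, assuming a national resolver that sends any query inside the protected zone (.br, following the Brazil example) to domestically controlled authoritative servers and everything else through ordinary recursion from the root. The server addresses are placeholders from the 192.0.2.0/24 documentation range, and `pick_upstream` is a hypothetical helper; in practice this would be a stub or forward zone in the resolver’s configuration rather than application code.

```python
from typing import List, Optional

# Hypothetical domestic authoritative servers for the protected zone
# (192.0.2.0/24 is reserved for documentation; these are placeholders).
DOMESTIC_ZONES = {
    "br": ["192.0.2.10", "192.0.2.11"],
}

def in_zone(qname: str, zone: str) -> bool:
    """True if qname is the zone itself or any name beneath it."""
    labels = qname.lower().rstrip(".").split(".")
    zone_labels = zone.lower().rstrip(".").split(".")
    return labels[-len(zone_labels):] == zone_labels

def pick_upstream(qname: str) -> Optional[List[str]]:
    """Servers to query for qname, or None for normal recursion from the root."""
    # Check the most specific (longest) zones first so nested overrides win.
    for zone in sorted(DOMESTIC_ZONES, key=lambda z: z.count("."), reverse=True):
        if in_zone(qname, zone):
            return DOMESTIC_ZONES[zone]
    return None

print(pick_upstream("www.gov.br"))   # ['192.0.2.10', '192.0.2.11']
print(pick_upstream("example.com"))  # None
```

Names under .com still fall through to the root and the .com registry, which is exactly why the gTLDs, not the country domains, are where the contention lies.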

What is the threat model for a country that distrusts US intentions? That the US government might delete or redirect a domain it does not like, say, al-qaeda.org? Actually, this happens all the time, not through the malevolence of the US government, but through the active incompetence of Network Solutions (NSI). You may recall that NSI, now a division of Verisign, is the entrenched monopoly that manages the .com top-level domain, and that has so far successfully browbeaten ICANN into prolonging its monopoly; one of its most outrageous claims is that it holds intellectual property rights to the .com database. Its security measures, on the other hand, owe more to the Keystone Kops, and it routinely allows domain names like sex.com to be hijacked. Breaking the NSI monopoly would be a worthwhile policy objective, but it does not require a change in governance, just the political will to confront Verisign (which, granted, may be more easily found outside the US).

This leads me to believe that the root cause of all the hue and cry, apart from the ITU angling for relevance, may well be the question of how the proceeds from domain registration fees are apportioned. Many of the policy decisions concerning the domain name system pertain to the creation of new TLDs like .museum or, more controversially, .xxx. The fact is, nobody wakes up in the middle of the night thinking: “I wish there were a top-level domain .aero so I could reserve a name under it instead of my lame .com domain!” All these alternative TLDs are at best poor substitutes for .com. Registrars, on the other hand, who provide most of ICANN’s funding, have a vested interest in the proliferation of TLDs, as each new one gives them more opportunities to collect registration fees.

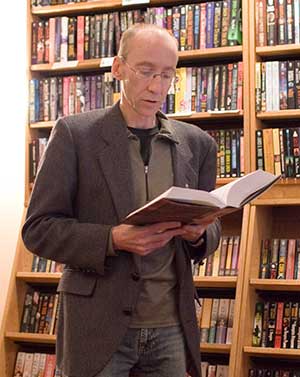

I reviewed the Malazan Book of the Fallen last year; it is one of the very finest fantasy series, in my opinion. I met Steven Erikson today during a book signing at Borderlands Books in San Francisco. Sadly, enough people in the audience had not yet read all of the first five volumes that he read from Memories of Ice rather than from the final manuscript of the sixth volume, The Bonehunters (due out in February 2006), which he carries with him on his Palm PDA.