Parallelizing the command line

Single-thread processor performance has stalled for a few years now. Intel and AMD have tried to compensate by multiplying cores, but the software world has not risen to the challenge, mostly because the problem is a genuinely hard one.

Shell scripts are still usually serial, and increasingly at odds with the multi-core future of computing. Let’s take a simple task as an example, converting a large collection of images from TIFF to JPEG format using a tool like ImageMagick. One approach would be to spawn a convert process per input file as follows:

#!/bin/sh

for file in *.tif; do

convert "$file" "$(echo "$file" | sed -e 's/\.tif$/.jpg/')" &

done

This does not work. If you have many TIFF files to convert (what would be the point of parallelizing if that were not the case?), you will fork off too many processes, which will contend for CPU and disk I/O bandwidth, causing massive congestion and degrading performance. What you want is to have only as many concurrent processes as there are cores in your system (possibly adding a few more because a tool like convert is not 100% efficient at using CPU power). This way you can tap into the full power of your system without overloading it.
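Rather than hard-coding the number of concurrent processes, a script can ask the system how many processors are online. The following is a minimal sketch, assuming a getconf that understands _NPROCESSORS_ONLN (true on Linux and OS X, but not required by POSIX, so other systems may need a different query). The fallback value of 1 is an arbitrary safe default.

```shell
#!/bin/sh
# Query the number of online processors. _NPROCESSORS_ONLN is a common
# extension rather than a POSIX requirement, so fall back to 1 on failure.
CPUS=$(getconf _NPROCESSORS_ONLN 2>/dev/null) || CPUS=1

# Some systems print a non-numeric answer such as "undefined";
# treat anything that is not a plain number as unknown.
case $CPUS in
    ''|*[!0-9]*) CPUS=1 ;;
esac

echo "Using $CPUS concurrent processes"
```

Adding one or two to the result is an easy way to experiment with the "a few more than the number of cores" idea mentioned above.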

The GNU xargs utility gives you that power using its -P flag. xargs is a UNIX utility that was designed to work around limits on the maximum size of a command line (historically quite small; POSIX only guarantees 4096 bytes, although modern systems usually allow far more). Instead of supplying arguments on the command line, you supply them as the standard input of xargs, which then breaks them into manageable chunks and passes them to the utility you specify.
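The chunking behavior is easy to see on a toy input. This small sketch uses the standard -n flag to cap the number of arguments per invocation at two, which makes xargs run echo three times for five inputs. The variable name chunks is only there for illustration.

```shell
#!/bin/sh
# Feed five arguments on standard input. With -n 2, xargs passes at most
# two of them to each echo invocation, so echo runs three times.
chunks=$(printf '%s\n' a b c d e | xargs -n 2 echo)
printf '%s\n' "$chunks"
# prints:
# a b
# c d
# e
```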

The -P flag to GNU xargs specifies how many concurrent processes can be running. Some other variants, like OS X’s non-GNU (presumably BSD) xargs, also support -P, but Solaris’ xargs does not. xargs is very easy to script and can provide a significant boost to batch performance. The previous script can be rewritten to use 4 parallel processes:

#!/bin/sh

CPUS=4

ls *.tif | sed -e 's/\.tif$//' | gxargs -P $CPUS -I {} convert {}.tif {}.jpg
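Parsing the output of ls breaks on file names that contain spaces or newlines. A more defensive variant is sketched below, assuming a find and an xargs that support the NUL-separated -print0 and -0 extensions (GNU and BSD versions do) as well as -P. The function name convert_all and the CONVERT override are hypothetical conveniences, the latter so the pipeline can be dry-run with a stand-in command.

```shell
#!/bin/sh
# CONVERT is a hypothetical hook: it defaults to ImageMagick's convert but
# can be pointed at another command (e.g. cp) to dry-run the pipeline.
CONVERT=${CONVERT:-convert}
export CONVERT

# convert_all DIR JOBS: convert every .tif under DIR with at most JOBS
# concurrent processes. Unlike the *.tif glob, find also descends into
# subdirectories.
convert_all() {
    dir=$1
    jobs=$2
    # -print0 / -0 separate names with NUL bytes, so spaces are safe.
    # -n 1 hands one file name to each sh invocation as $1, and
    # ${1%.tif} strips the suffix without needing sed.
    find "$dir" -name '*.tif' -print0 |
        xargs -0 -P "$jobs" -n 1 sh -c '"$CONVERT" "$1" "${1%.tif}.jpg"' _
}
```

It would be called as convert_all . 4 to mirror the script above.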

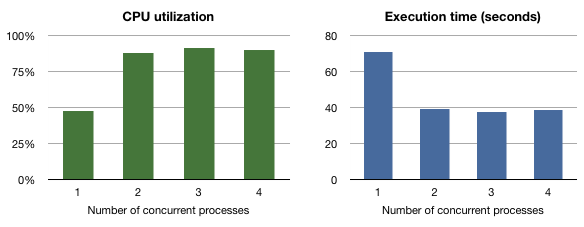

On my Sun Ultra 40 M2 (2x 1.8GHz AMD Opterons, single-core), I benchmarked this procedure against 920MB of TIFF files. As could be expected, going from 1 to 2 concurrent processes improved throughput dramatically, while going from 2 to 3 yielded only marginal improvements (convert is pretty good at utilizing CPU to the max). Going from 3 to 4 actually degraded performance, presumably due to the kernel overhead of managing the contention.

Another parallelizable utility is GNU make, via its -j flag. I parallelize as many of my build procedures as possible, but for many open-source packages the usual configure step is not parallelized (because configure does not really understand the concept of dependencies). Unfortunately, too many projects ship makefiles with missing dependencies, which causes parallelized makes to fail. Now that Moore’s law is running out of steam as far as single-task performance is concerned, harnessing parallelism using gxargs -P or gmake -j is no longer a luxury but should be considered a necessity.
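To illustrate why declared dependencies matter for make -j, here is a toy sketch. It assumes the make on your PATH accepts -j (GNU make does; the article calls it gmake), and the function name parallel_make_demo and the file names are made up for the example. Because c.out lists a.out and b.out as prerequisites, make can build those two concurrently and still knows to wait for both before building c.out. Leaving them off the c.out line is exactly the kind of missing dependency that breaks parallel builds.

```shell
#!/bin/sh
# parallel_make_demo DIR: write a tiny makefile into DIR and build it with
# two jobs. The "target: prerequisites ; recipe" form avoids the need for
# tab-indented recipe lines.
parallel_make_demo() {
    demo_dir=$1
    cat > "$demo_dir/Makefile" <<'EOF'
all: c.out
a.out: ; echo a > $@
b.out: ; echo b > $@
c.out: a.out b.out ; cat a.out b.out > $@
EOF
    # a.out and b.out are independent, so -j 2 may run them at the same
    # time; c.out is only started once both exist.
    (cd "$demo_dir" && make -j 2)
}
```

Running parallel_make_demo "$(mktemp -d)" leaves a c.out in the scratch directory containing the lines a and b.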